Internet censorship and surveillance are not unlike the hardware and infrastructure of the Internet itself, in that most people know they are there without knowing much about how they function. How does one distinguish censorship from an instance of network failure, for example? Or where does a censorship device sit in relation to the path between client and server?

The parallel advances of authoritarianism in multiple countries and the continuing rise of technologies enabling Internet censorship have resulted in newer and more sophisticated Internet censorship practices by governments, and by the private companies that support government tech and the Internet backbone itself. But as methods evolve, longstanding gaps remain in how to track and measure the technologies behind Internet censorship.

Such operational questions are the focus of our recent research published at ACM CoNEXT 2022 and supported by the Open Technology Fund’s Information Controls Fellowship Program (ICFP). This work is a result of collaboration between researchers at the University of Michigan, Princeton University, and the Citizen Lab. Working in four countries—Azerbaijan, Belarus, Kazakhstan, and Russia—we sought to measure Internet censorship devices and improve understanding about where they are located in the network, how they behave, and what the underlying rules and software are in any given instance of Internet censorship.

The “black box” of censorship is a barrier to effective measurement and to the growth of shared knowledge and shared data for the community of researchers and technologists. Naturally, these gaps in measurement or a shared base of knowledge also hinder the work of researchers, policy-makers and advocates who seek to understand, regulate or circumvent government censorship and the tools that enable the overuse or abuse of censorship technologies.

To help address this knowledge gap, we are piloting rigorous, reusable, and scalable methods that can locate censorship devices and better understand their operation. Our research has been based on Censored Planet, a platform to collect and analyze measurements of Internet censorship. We developed a traceroute method called CenTrace and a fuzzing method called CenFuzz, and we drew on existing measurement resources such as the Open Observatory of Network Interference (OONI).

By identifying the location of censorship devices, which can encompass both the hardware and the software performing censorship operations, we can understand better how censorship is being done, what entity may be performing the censorship, and thus under what rules and jurisdictions they may be operating. This knowledge can also help to show, for example, who is sponsoring the activity, how coordinated the activity is, and whether all the outcomes are even intended.

Similarities in the behaviors of the systems being used can allow for a kind of “profiling” or fingerprinting of the devices performing the censorship. This can enable us to better map the specific manufacturers or users of these systems. It can also enable us to develop more detailed surveys, inventories, landscaping, and typologies of the methods in use.

In addition to understanding location, devices and device behaviors, we also sought to measure the details of how censorship is performed at the software and data level, and how the characteristics and similarities of the deployed software itself can help in identifying location, devices and other underlying information.

Our study finds that censorship devices are often deployed in an ISP either directly connected to the user or connected to residential ISPs, but in some cases we find censorship devices deployed in transit ISPs or at Internet exchange points upstream from most residential ISPs. Notably, we even find censorship devices deployed in one country (Russia) that were directly affecting traffic to and from another country (Kazakhstan), which has significant implications for censorship measurement research and for policymakers.

We identify 19 network devices manufactured by commercial vendors, such as Cisco and Fortinet, that are deployed to perform censorship in the four countries studied. Finally, we also observed and documented the apparent rules and triggers for these devices, and noted discrepancies in how differently configured HTTP and TLS protocol requests are parsed. This can help with identification of devices and with potential circumvention.

Just as the motivations for Internet censorship and surveillance vary among the entities performing the censorship, the techniques and the tools are diverse and continually expanding. It is important to note, for instance, that not all content monitoring is done to enable censorship, nor is all censorship intended to restrict expression: Some censorship activities are required simply to comply with intellectual property laws or other regional rules.

Our results show that there is a significant need for continued monitoring of these devices. You cannot address what you do not understand. Between the rapid growth in hardware, software and methods, and the corresponding growth of competing methods to detect and circumvent censors, the landscape is increasingly diverse and increasingly hard to measure.

Deeper knowledge about Internet censorship activity—particularly these under-investigated elements—will enable more effective action, foster a more knowledgeable, better-equipped research community, and engage regulators, legislators, funders, advocates, media, and even technologists and technology companies themselves. We hope that our initial work in the four countries studied can galvanize the growing community of practice in the measurement of Internet censorship devices across a range of implementations and country contexts.

Findings

Our findings vary across the four countries researched—Azerbaijan, Belarus, Kazakhstan, and Russia. Several other variables and limitations are explained in our full paper, Network Measurement Methods for Locating and Examining Censorship Devices, but we believe researchers, rulemakers, advocates and technologists can use these initial results to develop new measurements, conduct new experiments, and widen the knowledge-sharing community.

The Importance of Ethical Measurement

Ethical considerations remain a major point of contention in censorship studies, as censorship measurement involves prompting hosts inside censored countries to transmit data that triggers the censor. This carries at least a hypothetical risk that local authorities might retaliate against the host’s operator. Groups conducting censorship measurement should carefully consider the safety of end users and operators.

Thankfully, over the past decade, the research community has developed several guidelines and safeguards for censorship measurements, which we follow rigorously in our work. These include purchasing vantage points only from commercial infrastructure services in countries under study, sending measurements only to Internet infrastructure destinations and not to end users, and sending measurements using deliberate tactics that will not cause disruption to networks.

1. Location of censorship devices: A key challenge for understanding Internet censorship is to discover where the censorship activity occurs: that is, where along the network path between client and server, and where geographically. Prior work has been constrained by its reliance on the physical location of the servers used for measurement, which our work shows yields an inaccurate estimate, and on measurements that track only known characteristics of specific censorship devices.

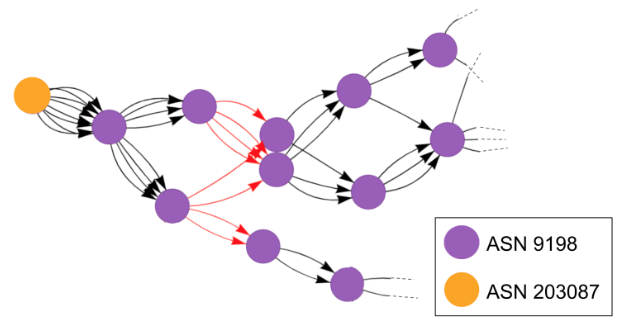

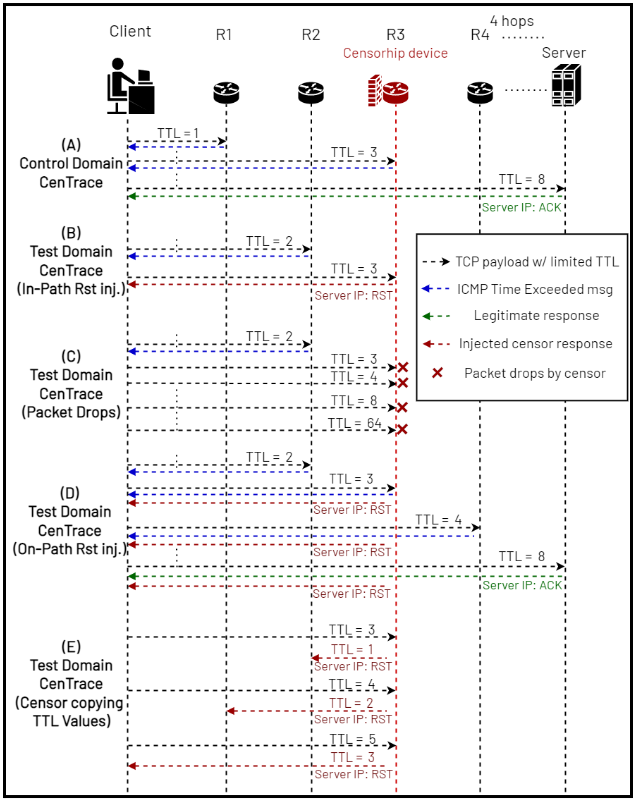

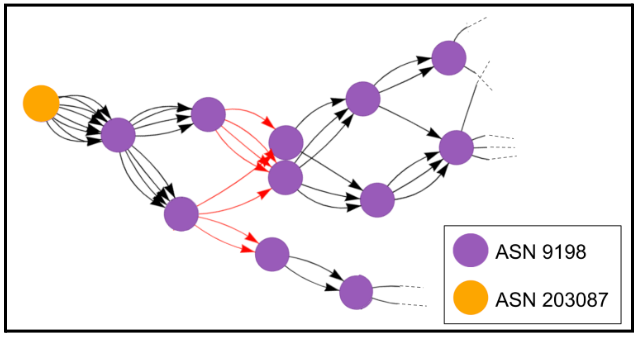

To address these historical limitations, we have developed a general-purpose method to determine device location that we call CenTrace. CenTrace monitors the path between client and endpoint using probes with limited Time to Live (TTL) values, assesses the points where connections terminate or packets are dropped, observes whether devices sit in or outside the path from client to endpoint, and extracts related IP addresses. Together, these steps let us determine the network and approximate location of the censoring devices (see Figure 1).
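The localization logic described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the CenTrace implementation: it assumes the TTL-limited probes have already been sent for a control domain and a test domain and that their outcomes have been recorded. The `ProbeResult` and `BlockingHop` types, the outcome labels, and the `find_blocking_hop` function are hypothetical names introduced for this example, and the addresses come from documentation ranges.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Outcome labels are assumptions made for this sketch, not CenTrace's own terms.
DISRUPTED = {"timeout", "rst", "blockpage"}


@dataclass(frozen=True)
class ProbeResult:
    ttl: int                            # TTL set on the outgoing probe
    kind: str                           # "icmp_ttl_exceeded", "server_response", or a DISRUPTED label
    responder_ip: Optional[str] = None  # address that answered, if any


@dataclass(frozen=True)
class BlockingHop:
    ttl: int
    kind: str
    approx_ip: Optional[str]            # hop address learned from the control trace


def find_blocking_hop(control: Sequence[ProbeResult],
                      test: Sequence[ProbeResult]) -> Optional[BlockingHop]:
    """Return the first TTL at which the test-domain probe is disrupted
    while the control-domain probe at the same TTL is not."""
    control_by_ttl = {p.ttl: p for p in control}
    for probe in sorted(test, key=lambda p: p.ttl):
        baseline = control_by_ttl.get(probe.ttl)
        if baseline is None or baseline.kind in DISRUPTED:
            continue  # no clean baseline at this TTL, so nothing to compare
        if probe.kind in DISRUPTED:
            return BlockingHop(probe.ttl, probe.kind, baseline.responder_ip)
    return None


# Hypothetical traces: the control domain reaches the server at TTL 4,
# while probes carrying the test domain go unanswered from TTL 3 onward.
control = [
    ProbeResult(1, "icmp_ttl_exceeded", "192.0.2.1"),
    ProbeResult(2, "icmp_ttl_exceeded", "198.51.100.1"),
    ProbeResult(3, "icmp_ttl_exceeded", "198.51.100.9"),
    ProbeResult(4, "server_response", "203.0.113.5"),
]
test = [
    ProbeResult(1, "icmp_ttl_exceeded", "192.0.2.1"),
    ProbeResult(2, "icmp_ttl_exceeded", "198.51.100.1"),
    ProbeResult(3, "timeout"),
    ProbeResult(4, "timeout"),
]
hop = find_blocking_hop(control, test)
print(hop)
```

In this example the test probe first goes unanswered at TTL 3, so the sketch places the device at or just before the hop that the control trace saw at that distance. A single unanswered probe could also be ordinary packet loss, so a practical measurement would repeat probes before drawing conclusions.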

Our findings show that CenTrace can be used to understand where blocking occurs. In nearly 74% of the blocking observed in all four countries, the “blocking hop” was found to be directly in the path between the client and the endpoint. While a portion of the remaining blocking was observed at the endpoint itself, such cases do not usually represent censorship by states or ISPs.

CenTrace measurements performed in Azerbaijan and Kazakhstan showed that the packet drops were occurring in one of each country’s larger ISPs, providers which are in turn used by many residential ISPs. In Kazakhstan, it was the state-owned ISP, as shown in Figure 2. This finding regarding censorship in “upstream” networks suggests that existing measurement methods such as OONI—which only report results based on the client’s own network—may not provide a complete picture of censorship policies in a region. Notably, in measurements from outside Kazakhstan, more than one third of the packet drops occurred along the route through networks in Russia. This shows that remote censorship measurements of a certain country can be affected by policies in a different country on the path, which has significant implications for censorship measurement research.

In Belarus, our measurements found that devices are deployed closer to the user and inject reset packets terminating the connection. We also observed specialized behavior by some devices in Russia that made them harder to detect.

For an explanation of our methodology and techniques used, see the full paper, Network Measurement Methods for Locating and Examining Censorship Devices.

Dropping, Injection and Blocking

To control online communications and access to content, censors use a variety of methods to disrupt the flow of data packets across the Internet. When restricted content or a request for restricted content is detected, a censor can drop connections along the path. Dropping prevents the original packets from reaching their destination and leaves the origin of the data request (the client) and the endpoint (the server) waiting for confirmation until the connection eventually times out. Injection inserts data into the path that reaches the client before the server’s legitimate response. In the most common case, the injected data is a reset (RST) packet that instructs the communicating devices to reset their connection, effectively terminating it. Censors can also inject instructions to show a blockpage, such as the ones users see when trying to view content that is prohibited under international copyright law.
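From the client's side, these three behaviors look different, and a measurement client can sort its observations accordingly. The following Python sketch is illustrative only: the `classify_outcome` function, its labels, and the blockpage marker strings are assumptions made for this example, and real blockpage signatures have to be collected empirically. The labels are deliberately hedged because a timeout or a reset on its own does not prove censorship.

```python
import socket
from typing import Sequence, Union

# Hypothetical marker strings; real blockpages vary by censor.
BLOCKPAGE_MARKERS = (b"access to this site is restricted", b"blocked by order")


def classify_outcome(result: Union[BaseException, bytes],
                     markers: Sequence[bytes] = BLOCKPAGE_MARKERS) -> str:
    """Map what the client observed (an exception or response bytes)
    to one of the disruption types described above."""
    if isinstance(result, (socket.timeout, TimeoutError)):
        return "possible_drop"            # nothing came back before the timeout
    if isinstance(result, ConnectionResetError):
        return "possible_rst_injection"   # connection was reset mid-exchange
    if isinstance(result, bytes):
        body = result.lower()
        if any(marker in body for marker in markers):
            return "blockpage_injection"
        return "no_disruption_observed"
    return "other_error"


print(classify_outcome(socket.timeout()))
print(classify_outcome(ConnectionResetError()))
print(classify_outcome(b"HTTP/1.1 403 Forbidden\r\n\r\nAccess to this site is restricted"))
```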

2. Probing censorship devices: To date, censorship measurement has relied heavily on known attributes of specific types of censorship devices, using those “fingerprints” to track associated activity. Blockpages have also served as an important vector for measurement, since page content and packet headers injected by censors usually contain identifying information.

Though standard HTTP has always allowed censors to view the data moving between client and server—and inject content such as blockpages into the path—the increasing default to the HTTPS protocol and fully encrypted communication has required censors to broaden their methods. It is also worth noting that blockpages make censorship measurement itself easier, since a dropped or reset connection on its own does not always indicate censorship activity.

In the four studied countries, we found that information from network “banners”, such as a device name or software version, was visible from devices performing censorship, and can help attribute censorship activity, especially when blockpages are not in use.

We probed the IP addresses of potential censorship devices found by our CenTrace measurements over the HTTP(S), SSH, Telnet, FTP, SMTP, and SNMP protocols (wherever open ports made those respective services available) to obtain indications of any filtering software running on these devices. Such protocol information has already been used in efforts to “fingerprint” network devices.

Combining these results, further manual investigation, and data from public fingerprint repositories, we were able to label many devices with the filtering technology used (among those that responded to our banner grabs). Of the devices accessible to banner grabs, nearly 40% showed a clear indication of firewall software used for censorship. Many commercial firewall devices were detected, including devices from popular network vendors such as Cisco and Fortinet. Our paper notes certain limitations to the measurements we used, including a bias toward devices with open ports that reveal banner information, and the use of cumbersome manual analysis to identify potential IP addresses. We can conclude that banner information is a viable and valuable alternative to blockpages, especially when blockpages are not being used in censorship.
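A banner grab and a first-pass vendor label can be sketched as follows. This is a simplified illustration, not the tooling used in the study: the regular expressions in `VENDOR_PATTERNS` are hypothetical and far cruder than curated fingerprint repositories, and `grab_banner` only reads what a service sends unprompted (as SSH, FTP, and SMTP typically do), so protocols such as HTTP would need a request first. As the ethics discussion above stresses, probes like this should be directed only at infrastructure that is appropriate to measure.

```python
import re
import socket
from typing import Optional

# Hypothetical patterns for illustration; real labeling combined public
# fingerprint repositories with manual investigation.
VENDOR_PATTERNS = {
    "Cisco": re.compile(r"cisco", re.IGNORECASE),
    "Fortinet": re.compile(r"forti(net|gate|os)", re.IGNORECASE),
    "Kerio Control": re.compile(r"kerio", re.IGNORECASE),
}


def grab_banner(host: str, port: int, timeout: float = 5.0,
                max_bytes: int = 1024) -> str:
    """Connect and return whatever the service sends first, if anything."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        try:
            data = sock.recv(max_bytes)
        except socket.timeout:
            data = b""  # service stayed silent; no unprompted banner
    return data.decode("latin-1").strip()


def label_vendor(banner: str) -> Optional[str]:
    """Return the first vendor whose pattern appears in the banner."""
    for vendor, pattern in VENDOR_PATTERNS.items():
        if pattern.search(banner):
            return vendor
    return None


# Labeling works on any stored banner text, so it can be tried offline.
print(label_vendor("SSH-2.0-Cisco-1.25"))
print(label_vendor("SSH-2.0-OpenSSH_8.9"))
```

Keeping the network step (`grab_banner`) separate from the labeling step (`label_vendor`) lets stored banners be relabeled later as fingerprints improve, without probing the devices again.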

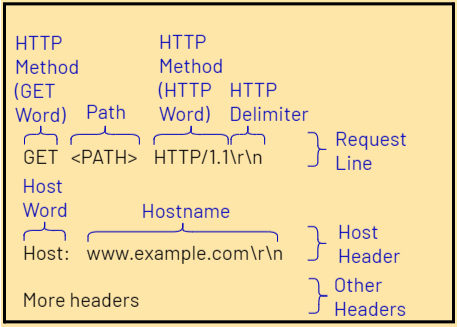

3. Fuzzing censorship devices: In order to collect more information about devices’ behavior and identify their rules and triggers, we developed an HTTP and TLS request fuzzing tool called CenFuzz, which sends modified (or “fuzzed”) versions of regular HTTP and TLS requests and observes how the censorship changes. For example, instead of using the standard HTTP GET method, we attempt to use other methods such as PUT. See Figure 3 for an example of the parts of an HTTP request that can be modified.
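The idea of varying one part of a request at a time can be illustrated with a short Python sketch. It is not CenFuzz itself: the `build_request` and `fuzzed_requests` helpers and the particular lists of alternative methods and paths are assumptions chosen for this example, and the sketch only constructs the request bytes rather than sending them.

```python
from typing import Iterator, Tuple

# Illustrative choices; an actual fuzzer would cover many more variations.
ALT_METHODS = ("PUT", "POST", "HEAD", "OPTIONS")
ALT_PATHS = ("/index.html", "/robots.txt")


def build_request(host: str, method: str = "GET", path: str = "/") -> bytes:
    """Assemble a minimal HTTP/1.1 request for the given host."""
    return (f"{method} {path} HTTP/1.1\r\n"
            f"Host: {host}\r\n"
            f"Connection: close\r\n\r\n").encode("ascii")


def fuzzed_requests(host: str) -> Iterator[Tuple[str, bytes]]:
    """Yield (label, request) pairs: a baseline plus single-field variants."""
    yield "baseline", build_request(host)
    for method in ALT_METHODS:
        yield f"method={method}", build_request(host, method=method)
    for path in ALT_PATHS:
        yield f"path={path}", build_request(host, path=path)


variants = dict(fuzzed_requests("example.com"))
for label, request in variants.items():
    print(label, request.split(b"\r\n")[0])
```

Changing a single field per variant means that any difference in how the censor responds can be attributed to that field, which is what makes the results usable for inferring rules and triggers.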

Across our measurements in the four countries under study, we find that devices behave differently towards different requests. Providing an HTTP path other than the default ‘/’ evaded blocking 68.72% of the time, and using an HTTP method other than GET evaded censorship between 0.44% and 90.58% of the time. For TLS, we observe that certain devices in Russia and Belarus did not censor certain TLS versions and cipher suites. We note in our paper that several of these strategies could work as circumvention methods and retrieve legitimate content from inside a censored network. These initial findings can inform future circumvention efforts.

4. Profiling devices and strategies: Using the results of our traceroutes, probes, and fuzzing measurements, we compiled the different censorship strategies that were observed from different devices and clustered them to observe their proximity to each other.

Our data analysis determined that similar censorship deployments can be identified by a set of attributes, a censorship “fingerprint”. Devices known to have the same manufacturer showed strong positive correlations with similar censorship strategies. The manufacturers for the observed devices included Fortinet, Cisco, and Kerio Control.

In addition to correlations between censorship behaviors and manufacturers, we also observed clusters of similar strategies that correlated with geographical location or Autonomous System (AS), i.e., network location. Taken together, the results show that devices manufactured by the same vendor or those deployed by the same actor exhibit highly similar censorship properties. These properties can be used to help fingerprint devices and identify other censorship activity.
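One simple way to compare devices by their observed behavior is to treat each device's strategy as a set of features and measure the overlap between sets. The Python sketch below uses Jaccard similarity for this purpose. That choice is an assumption made for illustration and is not necessarily the clustering method used in the paper, and the device names and feature labels are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Tuple


def jaccard(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    """Share of features two devices have in common (1.0 means identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def similar_pairs(devices: Dict[str, FrozenSet[str]],
                  threshold: float = 0.75) -> List[Tuple[str, str, float]]:
    """List device pairs whose behavior overlaps at or above the threshold."""
    pairs = []
    for x, y in combinations(sorted(devices), 2):
        score = jaccard(devices[x], devices[y])
        if score >= threshold:
            pairs.append((x, y, score))
    return pairs


# Hypothetical behavior profiles built from traceroute, banner, and fuzzing results.
devices = {
    "dev-a": frozenset({"drops_get", "ignores_put", "rst_on_sni"}),
    "dev-b": frozenset({"drops_get", "ignores_put", "rst_on_sni"}),
    "dev-c": frozenset({"drops_get", "injects_blockpage"}),
}
print(similar_pairs(devices))
```

In this toy data, `dev-a` and `dev-b` behave identically and are paired, while `dev-c` shares only one of four distinct features with them and falls below the threshold.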

Conclusions and Outlook

Our aim is to democratize in-depth technical investigation of the censorship devices deployed across the world. To help advance the study of censorship devices, we have made our tools and data completely open source. Our tools are already being used by research institutions such as The Citizen Lab to understand the technology behind blocking in many countries and to help advocate against its misuse.

We have already begun to integrate our tools into censorship measurement platforms such as Censored Planet, to help advance mainstream censorship research through more in-depth understanding of underlying censorship technologies. Over time, these expanded approaches to censorship measurement can inform the development of new standards, not only in measurement, but in device manufacturing and deployment, and in the creation of policies and rules.

We offer these findings as raw material and an early version of practices that can be iterated through new research projects across a number of disciplines. A growing community of practice will help not only to interrogate and refine these approaches, but to incentivize manufacturers, software providers and private ISPs to be more conscious, more ethical, less censoring, and less permissive in the creation and dissemination of censorship tools. Vendors and ISPs can also benefit from a better education in the potential uses of their products for inadvertent and unintended censorship and surveillance.

For groups investigating the circumvention of censorship, and the protection of those seeking freedom of expression, transparency and accountability of governments and safety for research communities, we hope this work provides guidance and a platform for cooperation and engagement.

Authors and OTF Learning Lab

Study Team: Ram Sundara Raman (University of Michigan), Mona Wang (Princeton University), Jakub Dalek (The Citizen Lab), Jonathan Mayer (Princeton University), Roya Ensafi (University of Michigan)

OTF Learning Lab: Jed Miller, Jason Aul